In March I posted a series of blog posts about my paternity leave project, which I called MepSQL. There was still one piece of the MepSQL build system that I hadn't published or blogged about. Since it is generally useful, I wanted to generalize and polish it and publish it separately. I finally got that done last week, and that's when I found that somebody else, namely alestic.com, had already published a similar solution two years ago. So yesterday I ported my BuildBot setup to use that system instead, and I'm happy to publish it at Open DB Camp 2011 in Sardinia.

Ok, so let's go back a little... What is the problem we are solving?

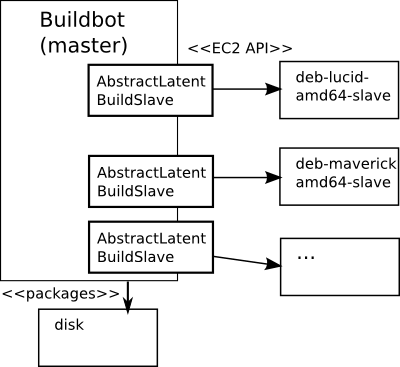

Let's refresh our memory with a picture (and you can also go back and read about it):

We've used the EC2LatentBuildSlave class to make our BuildBot system EC2-aware. Instead of keeping a fleet of BuildBot slave servers running 24/7, we have a "latent" BuildBot slave class that knows how to start and stop EC2 instances on demand. We only pay for what we need, and we only use the electricity we need. This is very convenient, and the cloud in general is a very good fit for batch computing like continuous integration and building packages.
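To make the "latent" idea concrete, here is a toy Python model of it. It is not BuildBot's API: OnDemandWorker, start_instance and stop_instance are hypothetical names, and the two callables stand in for the real EC2 start and terminate calls. build_wait_timeout only borrows the name of a BuildBot parameter; here it simply means "seconds of idleness before the instance is stopped".

```python
from typing import Callable, Optional


class OnDemandWorker:
    """Toy model of a latent build slave: the VM exists only while needed.

    Hypothetical sketch; start_instance/stop_instance stand in for EC2 calls.
    """

    def __init__(self, start_instance: Callable[[], str],
                 stop_instance: Callable[[str], None],
                 build_wait_timeout: float = 600.0) -> None:
        self.start_instance = start_instance
        self.stop_instance = stop_instance
        self.build_wait_timeout = build_wait_timeout
        self.instance_id: Optional[str] = None
        self.last_build_end = 0.0

    def run_build(self, now: float, duration: float) -> None:
        # Boot an instance only when a build actually arrives; reuse it if
        # one is already running.
        if self.instance_id is None:
            self.instance_id = self.start_instance()
        self.last_build_end = now + duration

    def tick(self, now: float) -> None:
        # Stop the instance once it has been idle for build_wait_timeout
        # seconds, so we stop paying for it.
        if (self.instance_id is not None
                and now - self.last_build_end >= self.build_wait_timeout):
            self.stop_instance(self.instance_id)
            self.instance_id = None
```

In this model, two builds that arrive close together share one instance, and the instance is stopped only after a full build_wait_timeout with nothing to do.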

The one thing that Kristian often mentioned as the major drawback of the MariaDB build system (and I quickly realized this is even more true on EC2) is the management of your virtualized slave servers. You need to prepare an image that has both the BuildBot software and your own project's build dependencies installed and configured. MariaDB had over 70 images last year, and now there are even more. (You have several for each version of Linux, Windows, Solaris, etc. that you support.) If you ever need to make a change to those, it is a lot of manual work! For instance, when MariaDB added the open graph engine, they had to link against the Boost library. This meant starting dozens of virtual servers, installing Boost on all of them, and then saving all the images. (I don't actually know the details; I assume that's how they do it...)

On Amazon this is even worse, because you cannot edit images. You can update your system, yes, but you cannot save it back to the same image; you have to create a new image. So you get a new AMI ID, which you then need to copy into your BuildBot scripts. It's like bad-tasting medicine: sometimes you have to take it, but you really don't want to.

Fortunately there is a solution!

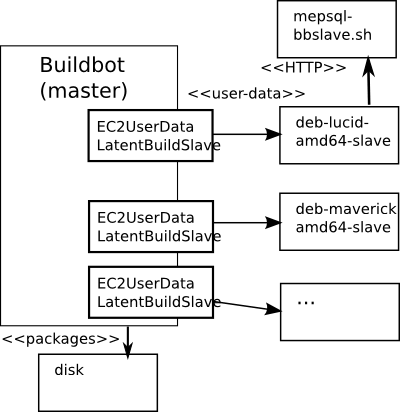

When starting an instance, Amazon EC2 allows you to specify a text string called "user data", which you can read from inside the instance over HTTP, using wget or curl. In fact, you can also get all kinds of metadata this way, such as the AMI ID, instance ID, hostname and IP address of the instance.
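For reference, here is a minimal Python sketch of reading these values from inside an instance. It assumes the classic unauthenticated metadata endpoint at 169.254.169.254 (the same URL you would hit with `curl http://169.254.169.254/latest/user-data`); instances configured to require IMDSv2 session tokens need an extra token request that this sketch omits. The helper names are illustrative only.

```python
import urllib.request

# Link-local EC2 instance metadata service (classic IMDSv1-style GET requests).
METADATA_BASE = "http://169.254.169.254/latest"
USER_DATA_URL = f"{METADATA_BASE}/user-data"


def metadata_url(key: str) -> str:
    """URL for a metadata item such as 'ami-id', 'instance-id',
    'hostname' or 'local-ipv4'."""
    return f"{METADATA_BASE}/meta-data/{key}"


def fetch(url: str, timeout: float = 2.0) -> str:
    # Only reachable from inside an EC2 instance.
    with urllib.request.urlopen(url, timeout=timeout) as resp:
        return resp.read().decode("utf-8")
```

On an instance, `fetch(USER_DATA_URL)` returns the user data string and `fetch(metadata_url("ami-id"))` returns the AMI ID the instance was started from.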

Ok, great, we can actually send some parameter-like info to the instance. Now what?

The Alestic.com people built on this idea and created modified Ubuntu images that use user data in a clever way: if the user data text starts with "#!", it is assumed to be a script and is executed. (Otherwise, nothing special happens, but you may of course use the user data for your own purposes.)
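The rule itself is simple enough to express in a few lines. The following Python function is a hypothetical re-sketch of that logic for illustration, not the actual init script shipped in those images: it runs the user data as an executable only if it starts with "#!", and otherwise leaves it alone.

```python
import os
import subprocess
import tempfile
from typing import Optional


def run_user_data(user_data: str) -> Optional[int]:
    """Execute user data as a script if it starts with '#!'.

    Returns the script's exit code, or None when the user data is not a
    script (it is then left untouched for other uses).
    """
    if not user_data.startswith("#!"):
        return None
    fd, path = tempfile.mkstemp(prefix="user-data-")
    try:
        with os.fdopen(fd, "w") as f:
            f.write(user_data)
        os.chmod(path, 0o700)
        # The kernel honours the #! line, so any interpreter can be used.
        return subprocess.run([path]).returncode
    finally:
        os.unlink(path)
```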

In practice, a good usage pattern turns out to be a short, generic script in the user data field, while the actual scripts are maintained on a web server you own. Alestic even created a nice utility, "runurl", that makes it easy to run remote files as local shell scripts.
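Conceptually, runurl just downloads a file and executes it locally. Here is a Python approximation of that idea, assuming nothing about Alestic's real implementation (which is a separate utility, not this code) beyond "fetch the URL, run it, pass the arguments along":

```python
import os
import subprocess
import tempfile
import urllib.request


def runurl(url: str, *args: str) -> int:
    """Download the file at `url` and run it as a local executable.

    Hypothetical re-sketch of the runurl idea; returns the exit code.
    """
    with urllib.request.urlopen(url) as resp:
        body = resp.read()
    fd, path = tempfile.mkstemp(prefix="runurl-")
    try:
        with os.fdopen(fd, "wb") as f:
            f.write(body)
        os.chmod(path, 0o700)
        # Extra arguments are passed straight through to the script.
        return subprocess.run([path, *args]).returncode
    finally:
        os.unlink(path)
```

Because the script lives on your web server, changing what every new instance does means editing one file there instead of rebuilding images.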

Note that official Ubuntu EC2 images nowadays contain the init script that does this, and Alestic links to Debian images that do this too. For other operating systems (CentOS, RHEL...) you need to create an AMI yourself, which is basically just the vanilla AMI plus this init.d script.

To do this specifically with BuildBot, I made a simple wrapper subclass, EC2UserDataLatentBuildSlave, that builds on the original EC2LatentBuildSlave and additionally takes some arguments from your BuildBot configuration, which are used to create a short shell script for the user_data parameter. You pass in parameters about how the BuildBot slave should connect back to the master, and a URL to a remote shell script that installs buildbot, bzr and whatever other build tools you need (apt-get build-dep mysql-server in my case).

The gist of it, from the subclass constructor, is here:

```python
# Note: With userdata bootstrap slaves, we don't need to manage slave
# passwords, we can just generate one and send it to the slave.
import random
password = str(random.randrange(10000000, 99999999))

# The following script will be passed as user-data to the new EC2 instance.
user_data = """#!/bin/bash
set -e
set -x
# Log output (https://alestic.com/2010/12/ec2-user-data-output)
exec > >(tee /var/log/user-data.log|logger -t user-data -s 2>/dev/console) 2>&1
# Get the runurl utility. See https://alestic.com/2009/08/runurl
wget -qO/usr/bin/runurl run.alestic.com/runurl
chmod 755 /usr/bin/runurl
# Run the mepsql-bakery bootstrap script
export BB_MASTER='""" + bb_master + """'
export BB_NAME='""" + name + """'
export BB_PASSWORD='""" + password + """'
runurl """ + bootstrap_script + """
"""

EC2LatentBuildSlave.__init__(self, name, password, instance_type, ami,
                             valid_ami_owners, valid_ami_location_regex,
                             elastic_ip, identifier, secret_identifier,
                             aws_id_file_path, user_data,
                             keypair_name,
                             security_name,
                             max_builds, notify_on_missing, missing_timeout,
                             build_wait_timeout, properties)
```

You can find the whole ec2userdatabuildslave.py here, along with an example shell script, example-bbslave.sh, that you could put somewhere and use as the value for bootstrap_script.
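One thing to watch with building the script by string concatenation is shell quoting: a value containing a single quote would break the generated script. The numeric password is safe, but if the other arguments can contain arbitrary characters, quoting them with Python's shlex.quote is a cheap hardening step. The sketch below is a standalone, hypothetical helper (make_user_data), not part of ec2userdatabuildslave.py; it reproduces the same script lines.

```python
import shlex


def make_user_data(bb_master: str, name: str, password: str,
                   bootstrap_script: str) -> str:
    """Build the user-data bootstrap script, shell-quoting every value."""
    lines = [
        "#!/bin/bash",
        "set -e",
        "set -x",
        "# Log output (https://alestic.com/2010/12/ec2-user-data-output)",
        "exec > >(tee /var/log/user-data.log|logger -t user-data -s"
        " 2>/dev/console) 2>&1",
        "# Get the runurl utility. See https://alestic.com/2009/08/runurl",
        "wget -qO/usr/bin/runurl run.alestic.com/runurl",
        "chmod 755 /usr/bin/runurl",
        # shlex.quote adds quotes only when a value needs them.
        f"export BB_MASTER={shlex.quote(bb_master)}",
        f"export BB_NAME={shlex.quote(name)}",
        f"export BB_PASSWORD={shlex.quote(password)}",
        f"runurl {shlex.quote(bootstrap_script)}",
    ]
    return "\n".join(lines) + "\n"
```

For example, `make_user_data("buildmaster.example.com:9989", "ubuntu-amd64", "12345678", "https://example.com/bbslave.sh")` (placeholder host and URL) yields the same script as the concatenated version, while a name like `it's` comes out safely quoted.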

As you can see, an unplanned but nice side benefit is that when you configure your buildslaves on the fly like this, you don't need to maintain a list of usernames and passwords: you can just generate a password on the fly and pass it to the buildslave as part of the user data. Another piece of administrivia just went away!
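Since the password is now generated per instance, it is worth generating it well. Python's random module is documented as unsuitable for security purposes, so a variant using the secrets module is a reasonable alternative to random.randrange. The helper name new_slave_password is hypothetical:

```python
import secrets


def new_slave_password(nbytes: int = 16) -> str:
    """Random per-instance slave password from the OS CSPRNG.

    token_urlsafe only emits [A-Za-z0-9_-], so the result needs no shell
    quoting when it is embedded in the user-data script.
    """
    return secrets.token_urlsafe(nbytes)
```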

Using the above approach proved very beneficial during the development phase. Whenever I needed to tweak my buildslaves, instead of creating a completely new set of AMI IDs, I could just add a single line to the remote runurl script and the problem was solved! Working on any task in the cloud, especially one that involves multiple different images, just became so much more enjoyable.

PS: This was posted to Planet Drizzle purely as an FYI for Monty Taylor and Patrick Crews.

PS 2: This implements feature request #418549 in Launchpad (Launchpad itself).
