This is the third post in a series about developing the MepSQL build system. In the previous posts we chose BuildBot running in the Amazon EC2 cloud. In this post we take a closer look at how the packages are built (to be followed by even closer looks in later posts :-)

One of the things missing when you fork MySQL is the build system. (The other main missing component is the manual.) Anyone can compile MySQL from source, but the actual build system (scripts, testing, etc.) used by MySQL itself is not public. The same is true for the automated testing. MariaDB uses the open source tool BuildBot for both of these tasks. In this post we are mainly concerned with building packages; more precisely, we are mainly concerned with BuildBot itself, and the details of building the packages are saved for a later post. I will document both the MariaDB system and the MepSQL system that was derived from it.

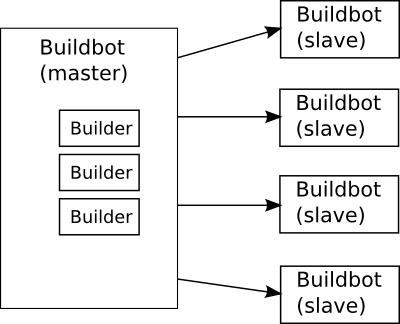

A classic BuildBot installation

A classic BuildBot installation is a normal master-slave system:

You set up one server to act as the BuildBot master. This host holds the entire configuration of your BuildBot system: how many slaves there are and what they are called, what jobs to run on them, etc. Typically the master monitors your source code repository so that a commit automatically triggers a test run. Finally, the master provides a web interface with some nice reports of whether tests and builds succeeded or not.

You then add as many slaves as needed. The only configuration a slave needs is the address of the master it should connect to.

The jobs to be run are called Builders. They are defined in the master configuration file, yet they actually run on one or more slaves. You can run one builder on all slaves, or different builders on different slaves - as you wish. (For instance, the Builder that builds DEB packages is only run on Ubuntu and Debian slaves, as anything else wouldn't make sense!)
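The division of labour can be modelled in a few lines of plain Python. This is not BuildBot's configuration API (a real master.cfg uses BuildBot's own slave and builder classes); the slave names and the `allowed_os` field are hypothetical. The point is only that the master holds all the knowledge about which Builder may run where, while each slave contributes little more than a name.

```python
from dataclasses import dataclass


@dataclass
class Slave:
    name: str
    os: str  # operating system of the slave host


@dataclass
class Builder:
    name: str
    # Operating systems this builder makes sense on; empty means "any".
    allowed_os: frozenset = frozenset()


def assign(builders, slaves):
    """Return {builder name: [names of the slaves it may run on]}."""
    return {
        b.name: [s.name for s in slaves if not b.allowed_os or s.os in b.allowed_os]
        for b in builders
    }


# Hypothetical fleet: the DEB builder is restricted to Debian-like slaves.
slaves = [
    Slave("ubuntu-slave", "ubuntu"),
    Slave("debian-slave", "debian"),
    Slave("centos-slave", "centos"),
]
builders = [Builder("test"), Builder("deb-packages", frozenset({"ubuntu", "debian"}))]
plan = assign(builders, slaves)
print(plan)
```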

Btw, it is the same BuildBot software running on all of those hosts; whether a host is a master or a slave is purely a matter of configuration.

Some of the benefits of this system are:

- Distributed computing across the many slaves while being managed from a central place.

- Easy to add slaves representing different operating systems, different versions of them, different hardware platforms and different compilers to get good test coverage.

- "Crowdsourcing": Developers can install BuildBot on their desktops so that the slave can run jobs on them when the desktop is idle. Also community members can provide spare servers - this is a nice way to contribute to an open source project if you don't have the time or knowledge to write code.

How MariaDB uses BuildBot

The MariaDB BuildBot configuration is stored in the Launchpad repository mariadb-tools. It is one big file of Python code.

This is one thing one could criticize BuildBot for: it took me about 2 long nights to digest and understand the MariaDB config, even though I had the advantage of already knowing what it does. I personally like having one text file for configuration, but some practices could be developed to keep it manageable, for instance breaking out smaller files that are then included into the master config file. The Builder definitions, for example, end up being many lines of shell script; with a separate file per Builder, everything would be easier to digest.
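Since master.cfg is itself Python, one way to follow that suggestion is to keep each Builder's shell script in its own file and read the files in when the master starts. The sketch below assumes a hypothetical layout (one `*.sh` file per Builder, named after it); neither the MariaDB nor the MepSQL config is known to do this.

```python
import tempfile
from pathlib import Path


def load_builder_scripts(directory):
    """Return {builder name: shell script} for every *.sh file in directory.

    Hypothetical layout: one file per Builder (e.g. builders/tarbake.sh),
    named after the Builder that runs it.
    """
    return {path.stem: path.read_text() for path in sorted(Path(directory).glob("*.sh"))}


# Demo with a throwaway directory standing in for builders/.
with tempfile.TemporaryDirectory() as tmp:
    Path(tmp, "tarbake.sh").write_text("set -ex\nmake dist\n")
    Path(tmp, "README").write_text("not a builder script\n")
    scripts = load_builder_scripts(tmp)

print(scripts)  # {'tarbake': 'set -ex\nmake dist\n'}
```

A build step could then take its script from `scripts["tarbake"]` instead of a long inline triple-quoted string.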

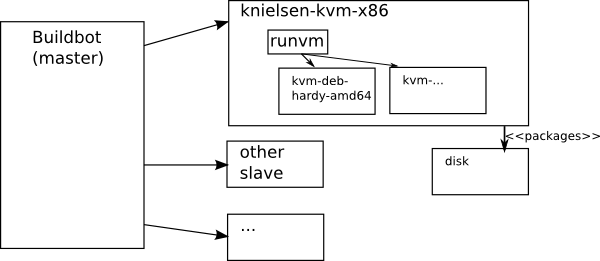

Anyway, MariaDB uses a classic, non-cloud BuildBot setup for testing (documented here). But for building packages they take a different approach:

There's one slave, knielsen-kvm-x86, that builds all the packages. To do that, this slave holds about 70 different KVM virtual machine images of various 32-bit and 64-bit operating systems, some used to build packages and some to test them.

A utility script called runvm makes these virtual environments simple to use. The shell script takes as arguments the image to launch and the parameters to launch it with, then makes an ssh connection into the running image and executes commands that it reads from stdin. So the commands used to build MariaDB packages are piped via runvm into the environment doing the build. There's also an escape character that makes a command execute on the host side instead.
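The dispatching part of that idea can be modelled as follows. This is an illustrative model, not the real runvm script: it assumes guest commands are fed to `/bin/sh -s` over ssh on the forwarded localhost port, and it takes `=` as the host-side escape because that is the prefix visible in the MariaDB config. Booting and shutting down the VM, which runvm also does, is left out.

```python
import shlex

HOST_ESCAPE = "="  # prefix used in the MariaDB config for host-side commands


def plan_runvm(port, user, commands):
    """Model of how runvm dispatches its commands (not the real script).

    Returns (where, argv, stdin) tuples: guest commands are piped over ssh
    into the VM's forwarded port; escaped commands run on the host itself.
    """
    steps = []
    for cmd in commands:
        if cmd.startswith(HOST_ESCAPE):
            steps.append(("host", shlex.split(cmd[len(HOST_ESCAPE):]), None))
        else:
            ssh = ["ssh", "-p", str(port), f"{user}@localhost", "/bin/sh", "-s"]
            steps.append(("guest", ssh, cmd))
    return steps


steps = plan_runvm(2223, "buildbot", [
    "set -ex\nmake dist\n",
    "= scp -P 2223 'buildbot@localhost:buildbot/build/mariadb-*.tar.gz' .",
])
for where, argv, stdin in steps:
    print(where, argv)
```

Actually executing a step would then be a matter of `subprocess.run(argv, input=stdin, text=True, check=True)`.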

An example of using runvm from a Builder is the step that builds the source tarball. (The tarbake51.sh script is part of the OurDelta-derived set of build scripts, the topic of a later blog post.) Towards the end you can see how some scp commands move the result files out of the KVM image onto the knielsen-kvm-x86 BuildBot slave.

```python
# Excerpt from the MariaDB BuildBot master configuration (mariadb-tools).
# The imports and the BuildFactory line are added so the excerpt stands alone.
from buildbot.process.factory import BuildFactory
from buildbot.process.properties import WithProperties
from buildbot.steps.shell import Compile

f_kvm_tarbake_jaunty_x86 = BuildFactory()

f_kvm_tarbake_jaunty_x86.addStep(Compile(
    description=["making", "dist"],
    descriptionDone=["make", "dist"],
    timeout=3600,
    command=[
        # runvm options, then the KVM image to boot.
        "runvm", "--port=2223", "--user=buildbot", "--cpu=qemu64", "--smp=6",
        "--logfile=kernel_2223.log", "/kvm/vms/vm-jaunty-i386-deb-tarbake.qcow2",
        # Script run inside the VM; WithProperties fills in %(...)s from build properties.
        WithProperties("""
set -ex
mkdir -p buildbot
cd buildbot
rm -Rf build
bzr co "%(bakebranch:-lp:~maria-captains/ourdelta/ourdelta-montyprogram-fixes)s" build
cd build
bakery/preheat.sh
echo bakery-[0-9]* > bakery.txt
tar zcf $(cat bakery.txt).tar.gz $(cat bakery.txt)/
cd $(cat bakery.txt)/
bzr branch --no-tree "lp:~maria-captains/maria/%(branch)s" local-branch
bakery/tarbake51.sh %(revision)s local-branch
basename mariadb-*.tar.gz .tar.gz > ../distdirname.txt
mv "$(cat ../distdirname.txt).tar.gz" ../
"""),
        # A leading "=" runs the command on the host instead: copy the results
        # out of the VM through the ssh port forwarded to localhost:2223.
        "= scp -P 2223 buildbot@localhost:buildbot/build/distdirname.txt .",
        "= scp -P 2223 buildbot@localhost:buildbot/build/bakery.txt .",
        "= scp -P 2223 'buildbot@localhost:buildbot/build/mariadb-*.tar.gz' .",
        "= scp -P 2223 'buildbot@localhost:buildbot/build/bakery-*.tar.gz' .",
    ],
))
```
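The `%(...)s` placeholders in that script are BuildBot build properties, filled in by `WithProperties` before the command runs; `%(name:-default)s` falls back to the default when the property is not set. The toy re-implementation below handles just those two forms (it is not BuildBot's own code, which supports more syntax) to show what the script looks like by the time it reaches runvm. The property values are made up.

```python
import re

# %(name)s or %(name:-default)s: the two placeholder forms used in the excerpt.
PLACEHOLDER = re.compile(r"%\((?P<name>[A-Za-z_]\w*)(?::-(?P<default>[^)]*))?\)s")


def render(template, properties):
    """Toy stand-in for WithProperties: substitute build properties into template."""
    def substitute(match):
        name, default = match.group("name"), match.group("default")
        if name in properties:
            return str(properties[name])
        if default is not None:
            return default  # property not set: use the fallback after ":-"
        raise KeyError(f"build property {name!r} is not set")
    return PLACEHOLDER.sub(substitute, template)


script = (
    'bzr co "%(bakebranch:-lp:~maria-captains/ourdelta/ourdelta-montyprogram-fixes)s" build\n'
    'bzr branch --no-tree "lp:~maria-captains/maria/%(branch)s" local-branch\n'
    "bakery/tarbake51.sh %(revision)s local-branch\n"
)
# Hypothetical property values for one build; bakebranch is left unset.
print(render(script, {"branch": "5.1", "revision": "2742"}))
```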

The packages are then stored in a specific directory on knielsen-kvm-x86. How they travel from there to the download mirrors is outside the scope of the BuildBot configuration. Note that this is atypical for a BuildBot system: you'd normally expect the results of the process to be stored back on the master (as happens with MepSQL, see below). Here the slave producing packages has its own life inside the larger fleet of slaves, and the rest of the system has nothing to do with producing packages.

After this, the KVM image is shut down and its disk discarded, so the next run starts from a clean slate. This is a nice advantage of the virtualization approach: you are guaranteed a clean environment for each run. A QA environment can otherwise get "cluttered" with artifacts left over from previous runs, which then cause bugs in later runs, and this is very annoying to live with. The same advantage extends to testing: you can do a test install of your package in a separate environment that is free of build tools and such, so you know that the packages really contain everything an end user needs, that the dependencies are correct, etc.
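The clean-slate property boils down to "every run writes to a disposable copy of a pristine disk". The sketch below shows that pattern with a plain file copy standing in for a VM disk; it is a generic illustration, not how runvm does it (with real KVM images a qcow2 copy-on-write overlay on top of the base image would avoid copying the whole disk). The file names are hypothetical.

```python
import shutil
import tempfile
from contextlib import contextmanager
from pathlib import Path


@contextmanager
def scratch_disk(base_image):
    """Yield a throwaway copy of base_image and delete it afterwards.

    Whatever a run writes goes to the copy, so base_image stays pristine.
    """
    workdir = Path(tempfile.mkdtemp(prefix="build-run-"))
    disk = workdir / Path(base_image).name
    shutil.copy2(base_image, disk)
    try:
        yield disk
    finally:
        shutil.rmtree(workdir)  # discard the disk: the next run starts clean


# Demo: a small text file stands in for a VM disk image.
base = Path(tempfile.mkdtemp()) / "vm-jaunty-i386.img"
base.write_text("pristine")

with scratch_disk(base) as disk:
    disk.write_text("pristine + leftovers from this build")
    used_disk = disk

print(base.read_text())    # pristine
print(used_disk.exists())  # False
```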

The other big advantage is ease of maintenance: when you need to add new versions of Linux distributions, you just add more generic images to the collection; you don't need to install a BuildBot slave into each and every one of them.

On the other hand, maintaining the images is still a laborious task that the MariaDB documentation complains about. Whenever you need to change the images, such as when adding a new build dependency (for instance, the new OQGRAPH engine requires the Boost library), you have to install it into quite a few images!

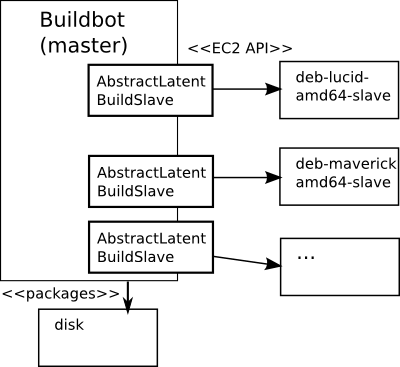

How MepSQL uses BuildBot in the EC2 cloud

The MepSQL BuildBot configuration is found in the Launchpad project MepSQL Bakery. The "bakery" terminology is inherited from the OurDelta scripts (used by the MariaDB scripts, which in turn are used by the MepSQL scripts), where building packages is referred to as "baking" them. The project also contains the new loitsutbuildslave.py class, which is a simple extension of the brilliant ec2buildslave.py that comes with BuildBot.

When choosing to do this project in the EC2 cloud, I knew I would not be able to copy the MariaDB system as such. The runvm approach is based on the idea of running many KVM images inside one BuildBot slave, and several lines of code are hardwired around this assumption (such as the scp into a port on localhost). On a cloud, however, you are already running inside a virtual server: you cannot run virtual instances inside it, and there would be no point in doing so. Instead, EC2 provides a REST API and command line tools that make it easy to launch more servers.

This is where I found the brilliant EC2LatentBuildSlave class that already exists in BuildBot to solve this same use case. It was developed by Canonical, who apparently use EC2 for their own purposes too.

EC2LatentBuildSlave is a subclass of AbstractLatentBuildSlave. It acts like the standard BuildSlave class and essentially lies to the BuildBot master about the slave being connected and idle, while in fact it only starts up a new EC2 server on demand, when a build is started. Note that this merely saves resources on EC2, and thus money: without it I could still use EC2 instances as slaves and start them manually as needed, or keep them running all the time (which would be costly).
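The latent-slave lifecycle can be sketched as a small abstract class. This is a simplified, synchronous model, not BuildBot's API: the real AbstractLatentBuildSlave methods return Twisted Deferreds, and BuildBot can keep an instance around for a while after a build (its build_wait_timeout setting) instead of stopping it immediately as this model does. `FakeCloudSlave`, the slave name and the artifact name are hypothetical.

```python
from abc import ABC, abstractmethod


class LatentSlave(ABC):
    """Simplified model: the master always sees this slave as available,
    but a real machine only exists between start_instance() and stop_instance()."""

    def __init__(self, name):
        self.name = name
        self.running = False

    @abstractmethod
    def start_instance(self): ...

    @abstractmethod
    def stop_instance(self): ...

    def run_build(self, build):
        if not self.running:  # start a server only when a build actually arrives
            self.start_instance()
            self.running = True
        try:
            return build()
        finally:
            self.stop_instance()  # stop paying for the server once the build is done
            self.running = False


class FakeCloudSlave(LatentSlave):
    """Records lifecycle events instead of calling a real cloud API."""

    def __init__(self, name):
        super().__init__(name)
        self.events = []

    def start_instance(self):
        self.events.append("start")

    def stop_instance(self):
        self.events.append("stop")


slave = FakeCloudSlave("ec2-jaunty-i386")
result = slave.run_build(lambda: "mepsql-5.1.tar.gz")
print(result, slave.events, slave.running)
```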

What I like about how the MepSQL BuildBot setup ended up is that it more closely resembles the classic BuildBot setup. In fact, it would be trivial to migrate the MepSQL system back to a classic non-cloud BuildBot setup, since the same configuration file could be used (almost) as is. All of the virtualization-related stuff is abstracted away into the EC2LatentBuildSlave methods start_instance() and stop_instance(). (In MariaDB the runvm stuff is hard-coded into the build scripts.)

So far, however, the main problem of the MariaDB setup remains: if I need to adjust one or all of the AMI images, I need to manually start each of them, install the new stuff or make the changes, then take new snapshots to create new AMI images. In fact it is even worse: at MariaDB they can at least overwrite the previous KVM images, whereas on EC2 I end up with a set of new AMI IDs, which must replace the old AMI IDs in the BuildBot configuration file. There's even a Launchpad bug (or feature request) about this.

To solve this problem I created a new system to configure the AMI images on the fly when they are booting up. I've christened this system "loitsut" or "loitsut scripts". It will be the topic of its own blog post later. (I need to actually upload the code to the Launchpad project first.)
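Until the loitsut code is published, here is only a generic illustration of the underlying idea of configuring an instance at boot rather than baking changes into the AMI. EC2 lets you pass "user data" when launching an instance, and images that run cloud-init execute user data starting with `#!` as a script on first boot. The distribution mapping, Builder names and package lists below are assumptions for illustration, not loitsut's actual design.

```python
# Hypothetical package-manager commands per distribution family.
INSTALL = {
    "ubuntu": "apt-get update && apt-get install -y",
    "debian": "apt-get update && apt-get install -y",
    "centos": "yum install -y",
}

# Hypothetical build dependencies per Builder; adding Boost means editing one list.
BUILD_DEPS = {
    "deb-packages": ["bzr", "build-essential", "libboost-dev"],
    "rpm-packages": ["bzr", "gcc-c++", "boost-devel"],
}


def user_data(distro, builder):
    """Return a shell script to pass as EC2 user data when launching an instance."""
    packages = " ".join(BUILD_DEPS[builder])
    return "\n".join(["#!/bin/sh", "set -e", f"{INSTALL[distro]} {packages}"]) + "\n"


print(user_data("ubuntu", "deb-packages"))
```

With this approach a new build dependency is one edit to a list, rather than a new snapshot of every AMI plus new AMI IDs in the BuildBot configuration.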

Portability

As I've pointed out in previous blog posts, I always put a lot of thought into the reusability of code created in the MepSQL project. I think it is important that we avoid, as much as possible, duplicating work between the competing MySQL forks, especially in mundane areas such as build scripts. Other layers of the build system have also seen innovations in this regard.

In this case the result is less than perfect, since the MepSQL and MariaDB build systems are not interchangeable as they stand. However, this is due more to the runvm solution used at MariaDB than to anything done on the MepSQL side. In fact, the AbstractLatentBuildSlave pattern in BuildBot could be used by MariaDB too, in which case the rest of the code would be interchangeable.

This is the power of object oriented programming! By implementing just two methods, the same system could be used:

- In EC2, Rackspace or any other cloud.

- In a private cloud. (Since they support the EC2 API, this is a given.)

- Using your own scripts (like runvm) to launch virtual images on your own servers.

- Just a classic BuildBot master-slave setup without any virtualization involved.

So it is still relatively portable; it's just that for the third case (your own scripts like runvm) you need to implement the two methods yourself.
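The portability argument can be made concrete with a toy model: the code that runs a Builder stays identical, and only the two methods differ per backend. The backend classes, the AMI ID and the returned strings are hypothetical placeholders that describe actions instead of performing them; a real implementation would subclass BuildBot's AbstractLatentBuildSlave rather than this simplified interface.

```python
from abc import ABC, abstractmethod


class Backend(ABC):
    """The two methods a latent slave must provide."""

    @abstractmethod
    def start_instance(self) -> str: ...

    @abstractmethod
    def stop_instance(self) -> str: ...


class ClassicBackend(Backend):
    """Classic master-slave setup: the machine is always on."""

    def start_instance(self):
        return "no-op (slave is always running)"

    def stop_instance(self):
        return "no-op"


class CloudBackend(Backend):
    """EC2, Rackspace or a private cloud: launch and terminate an image."""

    def __init__(self, image_id):
        self.image_id = image_id

    def start_instance(self):
        return f"launch {self.image_id}"

    def stop_instance(self):
        return f"terminate {self.image_id}"


class LocalVMBackend(Backend):
    """Own scripts (runvm-style) booting a local KVM image."""

    def __init__(self, image_path):
        self.image_path = image_path

    def start_instance(self):
        return f"boot {self.image_path}"

    def stop_instance(self):
        return f"shut down {self.image_path}"


def run_builder(backend, steps):
    """Identical for every backend: only the two methods above differ."""
    log = [backend.start_instance()]
    log += [f"run: {step}" for step in steps]
    log.append(backend.stop_instance())
    return log


for backend in (ClassicBackend(), CloudBackend("ami-12345678"),
                LocalVMBackend("/kvm/vms/vm-jaunty-i386-deb-tarbake.qcow2")):
    print(run_builder(backend, ["make dist"]))
```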
